I attended the AWS New York Summit at the Javits Center. It was a two-day event with some really interesting AWS service launches (SageMaker in particular) and some good updates on services I've used before (Rekognition, Polly, and Lex). The most eye-catching story, of course, was how Fortnite runs on AWS managed services.

I also attended a number of really cool demos and learned a lot during the keynote. But what I enjoyed most was the hands-on session for AWS DeepLens. This was the first time I'd seen or even heard of DeepLens. I have played around with AWS Rekognition and Google Vision ML before, so I know enough about how these things work to get into trouble.

The hands-on session focused on taking the algorithms available in Amazon SageMaker and using them in your AWS DeepLens project. This lets you start from pre-trained models instead of building from scratch. You can further train these models in SageMaker and reference them from your DeepLens project, or you can import the model into an S3 bucket. I'm not sure what all the implications of importing are, but I'd guess it is more cost-effective, since after importing you only pay for the S3 storage.

We tried out two vision-based algorithms. The first was an object recognition algorithm that categorized objects and assigned a confidence level to each categorization. It was trained to recognize only 20 different objects, one of which was hot dogs. Yes, hot dogs.

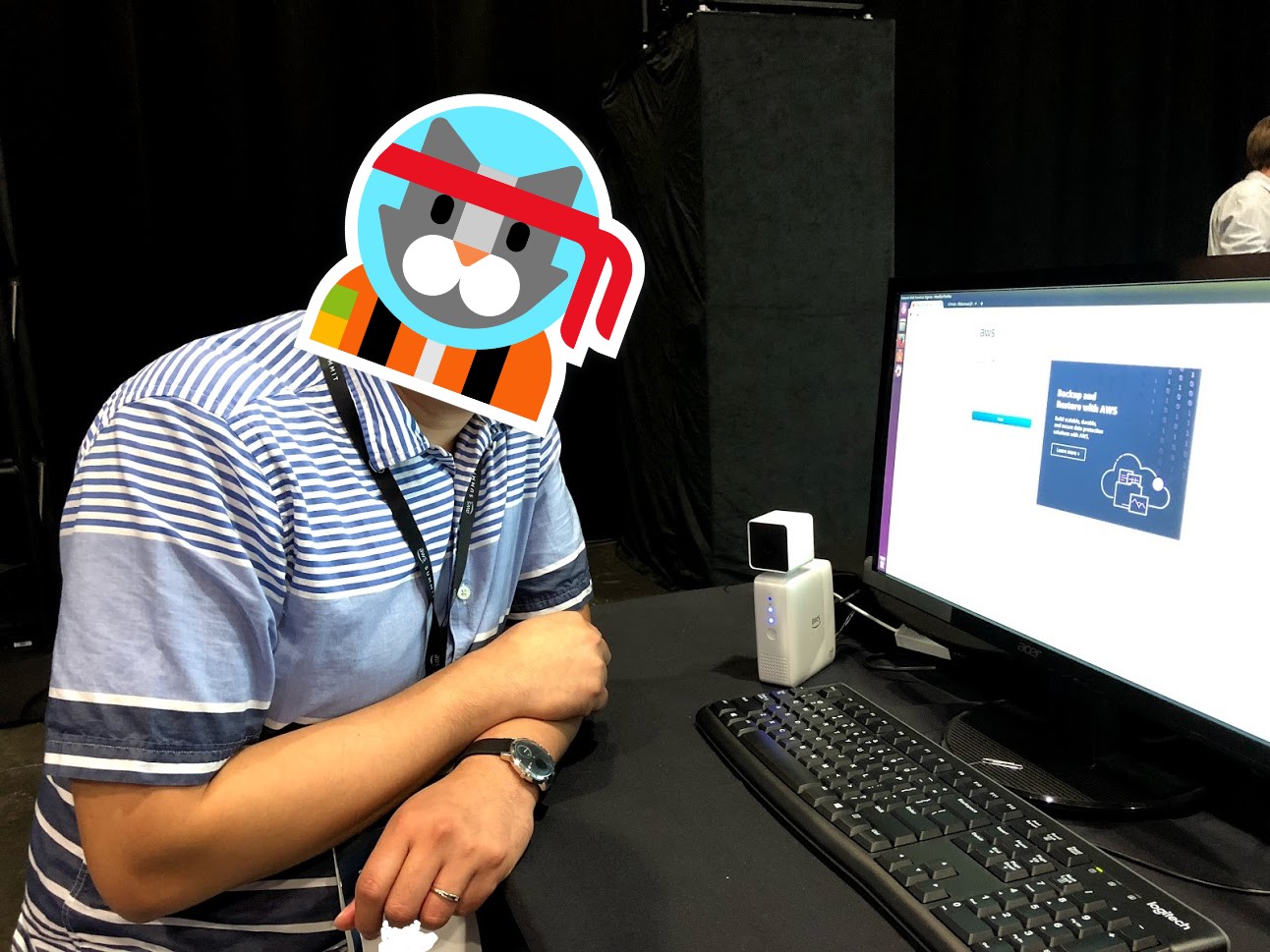
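A minimal sketch of the post-processing step described above: turning raw (class index, probability) pairs into labelled detections and keeping only the confident ones. Everything here is hypothetical: the `Detection` class, the `filter_detections` function, the 0.5 threshold, the three-entry label list, and the scores are illustrative only. None of it is the DeepLens or SageMaker API, and the workshop model's real output format may differ.

```python
from dataclasses import dataclass

# Hypothetical label list; the workshop model's actual 20 classes are not reproduced here.
LABELS = ["hot dog", "person", "chair"]


@dataclass
class Detection:
    label: str
    prob: float


def filter_detections(raw, labels, threshold=0.5):
    """Map (class_index, probability) pairs to labelled detections,
    keep those at or above `threshold`, and sort highest confidence first."""
    kept = [Detection(labels[idx], prob) for idx, prob in raw if prob >= threshold]
    return sorted(kept, key=lambda d: d.prob, reverse=True)


if __name__ == "__main__":
    # Made-up scores for illustration, not real model output.
    raw_output = [(0, 0.87), (1, 0.95), (2, 0.12)]
    for det in filter_detections(raw_output, LABELS):
        print(f"{det.label}: {det.prob:.2%}")
```

Running it prints the person and hot dog detections, highest confidence first, while the 12% chair score falls below the cutoff and is dropped. With a fixed cutoff like the assumed 0.5, a low score such as 28.71% would simply never reach any code downstream of the filter.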

The second was a face detection algorithm that detected faces and assigned a confidence score indicating how sure it was that it saw a face. I didn't have enough time to play around with it, and the results were a bit off at times. It's not very clear from the picture I took, but it gave my face only a 28.71% confidence score of being a face, while the guy beside me got over 90% even though he was farther away and only one side of his face was visible.

Another interesting feature of AWS DeepLens is the built-in deployment mechanism on the device. I believe it uses AWS IoT Greengrass under the covers. I really liked this simple and relatively fast way of deploying code to a device, since that has always been a personal pain point in my projects.

The device itself is not really that noteworthy. It does look cool from a gadget standpoint, but it was a PITA to set up. I don't know what it is running or what hardware is inside. It was definitely not the fastest thing in the world, and the camera is standard webcam quality. I did a quick Amazon price check, since I wanted to own one just to take it apart, but it was a whopping $249!!! Why the hardware isn't just a simple Raspberry Pi with a Movidius chip or something similar is beyond me.